Sexism and racism built into your phone? Maybe, particularly if you have an iPhone.

The Apple-made product is equipped, as most smartphones are, with a predictive text feature. A step beyond autocorrect, predictive text suggests the word you are typing and then the word you are likely to want next. It’s a handy feature, designed to help us all text just a little bit faster, and it can be convenient and sometimes a bit entertaining. Apple claims that iOS 8 predictive text may change the way you type:

“Now you can write entire sentences with a few taps. Because as you type, you’ll see choices of words or phrases you’d probably type next, based on your past conversations and writing style. iOS 8 takes into account the casual style you might use in Messages and the more formal language you probably use in Mail. It also adjusts based on the person you’re communicating with, because your choice of words is likely more laid back with your spouse than with your boss. Your conversation data is kept only on your device, so it’s always private.”

- Apple

A learning program, predictive text is supposed to contextualize based on the form of communication (e.g., text or e-mail) and whom you are communicating with. So for your mom or your boss, you can sound a little more polite, and for your best friend, your secret slang language is ready to go.

Fun and kind of handy, right? So what does this have to do with sexism and racism?

A lot, it turns out. Some of the predictive text is based on what it has learned from your personal texting habits, but it has to start somewhere. Apple has programmed iOS 8 with certain algorithms to get the predictive text started, and words you don’t use often still fall back on these algorithms. The suggested words are the ones Apple thought users would most likely use, suggestions that seem to just make sense or go together. No surprise, Apple’s algorithm is pretty solid with its recommendations, and I find myself using them fairly regularly.
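To make that idea concrete, here is a minimal sketch in Python of a next-word suggester that prefers what it has learned from your own messages and falls back on preloaded word-pair counts for everything else. This is not Apple's implementation, which isn't public; the function names (`count_bigrams`, `suggest`) and the tiny sample sentences are made up purely for illustration.

```python
from collections import Counter, defaultdict


def count_bigrams(sentences):
    """Count, for each word, which words follow it in the given sentences."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def suggest(word, personal, baseline, k=3):
    """Return up to k next-word suggestions, personal history first."""
    word = word.lower()
    # Start with what this user has actually typed after the word.
    suggestions = [w for w, _ in personal.get(word, Counter()).most_common(k)]
    # Fall back on the preloaded counts for any slots still empty.
    for w, _ in baseline.get(word, Counter()).most_common():
        if len(suggestions) >= k:
            break
        if w not in suggestions:
            suggestions.append(w)
    return suggestions


# Made-up toy data standing in for the preloaded text and one user's history.
baseline = count_bigrams([
    "good morning everyone",
    "good morning team",
    "good night",
    "good luck",
])
personal = count_bigrams(["good grief", "good grief"])

print(suggest("good", personal, baseline))  # ['grief', 'morning', 'night']
```

In a model like this one, the fallback list is only as neutral as the text it was counted from: whichever word pairs dominate the starting data are what a new user sees first.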

It appears that Apple finds sexism and racism a bit… well, predictable. Even in texting. At least, that’s how it seems with Apple’s program.

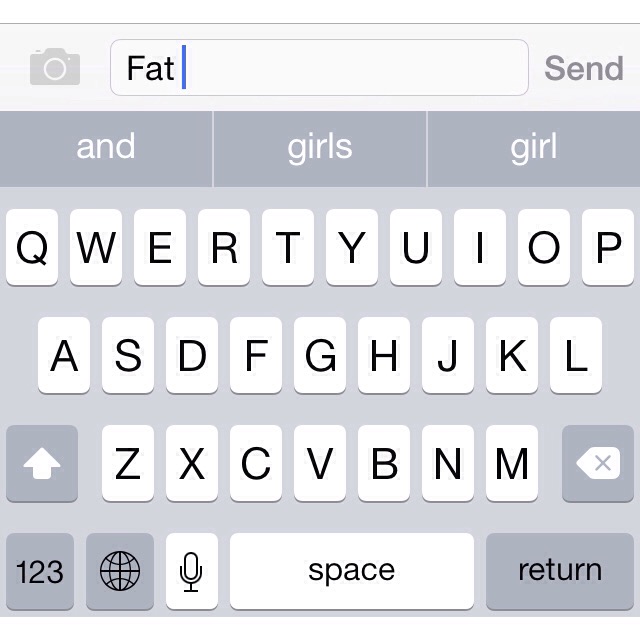

Type in “Fat” and, as soon as you hit space, three words pop up as suggestions: “and,” “girls,” and “girl.”

Because, obviously, we are only going to be talking about fat in reference to girls.

When my friend Julie Dollarhide from Hideadollar Yoga first discovered this on her phone, she wondered if maybe it was a fluke. She asked on Facebook and several of her friends tried it out with the same results. That’s when she texted me. I too asked around and sure enough, every one of my friends with an iPhone had the same results.

Fat shaming and sexism in two little predictive text suggestions.

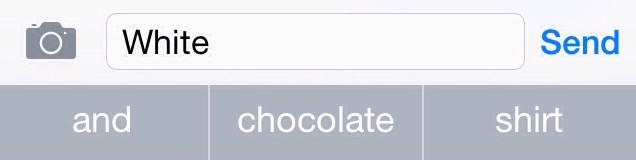

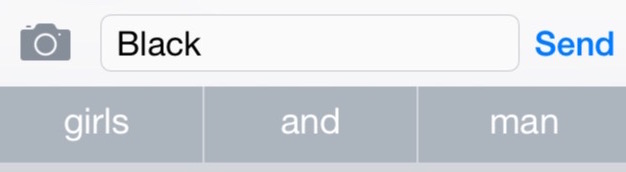

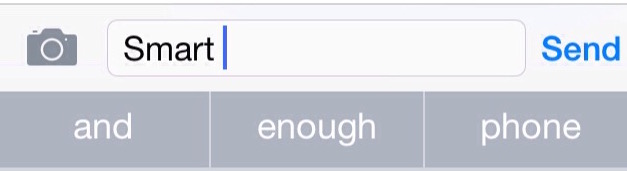

Our curiosity piqued, we began experimenting with different words, and what we discovered was interesting. According to Apple’s predictive text, when users type the word “black,” the top three words they’re likely to use next are “girls,” “and,” and “man” (sometimes “screen”), but for “white” they’ll be looking for “and,” “chocolate,” and “shirt” (sometimes “screen”). “Short” pulls up “girls,” “and,” and “time”; “tall” gives us “guys,” “girls,” and “and.” Interestingly, while “fat” can be expected as an adjective for young females (“girl”/“girls”), Apple suggests “day,” “graphics,” and “morning” for “beautiful” and “and,” “enough,” and “phone” for “smart.” Evidently Apple thinks it is more normal for people to talk about girls being fat than being beautiful or smart.

Speaking of smart...Apple, maybe not so much.

We checked with our friends with other phones, and the Windows and Android options are different. “Girl” and “girls” aren’t even among the five words suggested in the predictive text window when the word “fat” is typed in on a Windows phone. This is just a serious metadata problem for Apple. They need to fix it.

It’s not all bad. Many of the word suggestions just seem to make sense: they fill in common phrases or natural progressions, and they seem logical. So why this? Why does Apple think “fat” is most likely going to be followed by “girls” or “girl”? Or “black” by “girls” or “man,” but “white” by “chocolate” or “shirt”? Is this just a reflection of our culture? Do fat-shaming, sexism, and racism so completely infiltrate every aspect of our interactions that we need to predict them in our word suggestions for texting?

More importantly, if it is normal, why would we just accept that? The other smartphone companies haven’t. If Windows can suggest words that don’t treat fat-shaming, sexism, and racism as expected, why can’t Apple?

I don’t have any answers, but I do know that, as an iPhone owner who is ready to upgrade my phone, I’m going to be asking Apple to change this right away. It has been a long and conscientious process to challenge my own privilege and assumptions about size, sexism, and racism, and I certainly don’t want my phone predicting that it would be normal for me to revert. There are a lot of issues with the company, not the least of which are its manufacturing practices and labor concerns. While the words its predictive text program suggests may not seem like a major cause for concern, the reality is that little things like this continue to send messages that promote, normalize, and expect sizism, sexism, and racism. As consumers, we can stand up and say this isn’t what we want and chip away at the systemic expressions of oppression. These issues are so pervasive in our societies; let’s reject the idea that we need predictive text programs encouraging such mindsets.

To tell Apple that this isn’t acceptable, sign the change.org petition here.